Lifelong Machine Learning

Workshop timing will be announced soon.

Workshop Speaker

Name: Dr. Muhammad Taimoor Khan

Designation: Assistant Professor (FAST NU)

Introduction

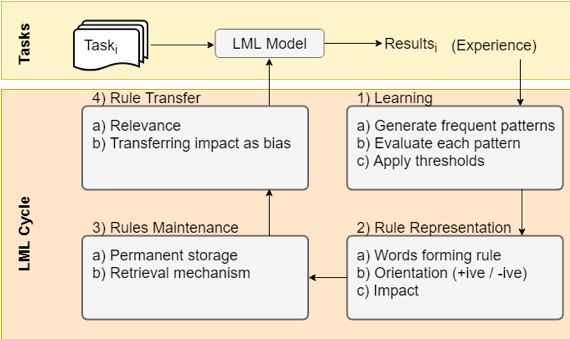

Lifelong Machine Learning (LML) models learn continuously, accumulating knowledge as they move from one task to another. This approach is well suited to large-scale data that spans multiple tasks, each with its own dataset. The model learns new knowledge from each task it performs and retains it for future use. It therefore tends to become more knowledgeable with experience, and its results are expected to improve accordingly. LML has two major advantages over traditional machine learning techniques. First, the model does not have to learn a concept from scratch each time the concept recurs across tasks. Second, past knowledge makes it easier for the model to learn new concepts by building on what it already knows, i.e., following the Matthew effect. Since constraints on storage and computational resources may not allow the data for all available tasks to be retained, LML-based models instead retain crude knowledge rules that can be effectively stored and maintained. Each time there is a new task to perform, the model is built from the data for the current task together with relevant past knowledge. LML-based models are particularly advantageous when some of the datasets are noisy or have limited representation.
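The loop described above (retain compact knowledge, reuse it on the next task, discard the raw data) can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions, not a method presented in the workshop: the class `LifelongLearner`, the helper `predict`, the word-count "knowledge rules", and the example reviews are all hypothetical.

```python
from collections import Counter, defaultdict


class LifelongLearner:
    """Toy lifelong learner (hypothetical): keeps word counts per label, not raw data."""

    def __init__(self):
        # Knowledge base: label -> word frequencies summarized from past tasks.
        self.knowledge = defaultdict(Counter)
        self.tasks_seen = 0

    def learn_task(self, examples):
        """Build a model for the current task from its data plus past knowledge."""
        current = defaultdict(Counter)
        for words, label in examples:
            current[label].update(words)
        # Combine retained knowledge with the evidence from the current task.
        labels = set(self.knowledge) | set(current)
        model = {label: self.knowledge[label] + current[label] for label in labels}
        # Retain only the summarized counts; the raw examples are not stored.
        for label, counts in current.items():
            self.knowledge[label].update(counts)
        self.tasks_seen += 1
        return model


def predict(model, words):
    """Return the label whose word counts overlap most with the given words."""
    scores = {label: sum(counts[w] for w in words) for label, counts in model.items()}
    return max(scores, key=scores.get)


# Two small made-up tasks: the second has no negative examples at all.
task1 = [(["great", "battery"], "pos"), (["poor", "screen"], "neg")]
task2 = [(["fast", "delivery"], "pos")]

learner = LifelongLearner()
m1 = learner.learn_task(task1)
m2 = learner.learn_task(task2)
print(predict(m2, ["poor", "screen"]))
```

The second task contains only positive examples, yet the model built for it can still recognize a negative review because the counts retained from the first task are reused. Real LML systems use far richer knowledge representations and must also decide which past knowledge is relevant to the current task.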

An important aspect of LML models, which makes them very desirable in certain cases, is that they never stop learning. This is particularly useful when the model operates in a dynamic environment whose data comes from online or real-world sources. The future is unpredictable, and we cannot train a model for everything it may encounter. This is where LML models have an advantage over traditional ML-based models.

Applications

- Autonomous cars cannot be trained in advance for everything they may encounter on roads in different countries.

- A household robot cannot be taught in advance the likes and dislikes of its owner, the structure of their house, and the position and working of various equipment.

- A virtual assistant cannot be equipped in advance with everything it may encounter in a conversation with a customer, which may relate to events occurring around them.

Objectives

- To understand the concept of Lifelong Machine Learning and its working architecture.

- To introduce the research background in this area by referring to the problems addressed in the literature.

- To provide a platform for new researchers to explore this area and discuss the open issues that they may want to work on.

Target Audience

The target audience for this workshop is graduate and post-graduate students who have taken a machine learning course.